Introduction

We, as users of technology, are inevitably entangled in its necropolitics. Technological monopolies have dispossessed us of the knowledge of the Web 1.0 era and locked us into systems that fuel an arms race toward ever-more-militarized futures.

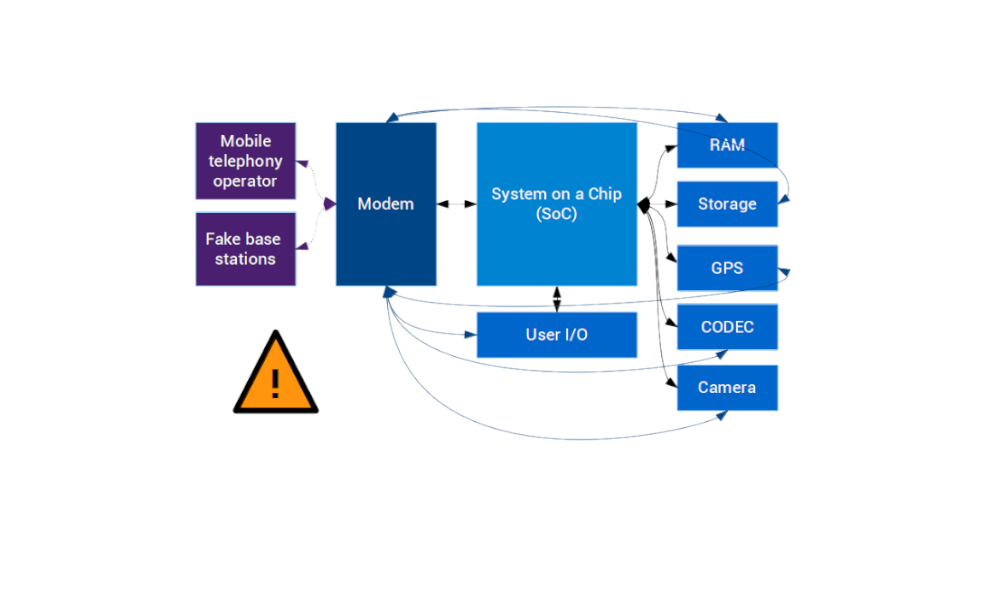

Our everyday technologies (smartphones, messaging apps, social media platforms, AI systems, and IoT devices) run on black-boxed systems that extract our data and profile us, not merely for consumer targeting but to train marketable surveillance systems, predictive policing tools, and, ultimately, killing machines. In this ecosystem, Big Tech is turning us, its users, into both the weapons and the targets of a war machine.